If you have only a few minutes to spare, here’s what you should know.

- OpenAI has four GPT-3 model versions: Ada, Babbage, Curie, and Davinci. Ada is the smallest and cheapest model but performs worst, while Davinci is the largest, most expensive, and best performing of the set.

- GPT-3 Davinci is the best performing model on the market today. It has more parameters and was trained on more data than its open source alternatives, GPT-Neo and GPT-J.

- GPT-J generally performs better than the smaller GPT-3 models, Ada and Babbage, but not quite as well as Davinci.

- GPT-Neo and GPT-J are open source and free to use, and both are good alternatives to OpenAI’s GPT-3 for users for whom cost is a constraint.

- GPT has broad applications in industries such as entertainment, advertising, information technology, software development, and more. The availability of open source GPT-3 alternatives makes this tech more accessible and affordable for users, which is a welcome development.

- GPT-3 is remarkable but fails spectacularly at times and has a long way to go before it can handle tasks such as open-ended chat.

This post is sponsored by Multimodal, a NYC-based development shop that focuses on building custom natural language processing solutions for product teams using large language models (LLMs).


“A robot wrote this entire article. Are you scared yet, human?”

This was the title of a Guardian op-ed published in September 2020. And as it suggests, the author was a robot — a natural language processing model developed by OpenAI. This "micro robot" is known as GPT-3. Released on June 11, 2020, GPT-3 has become the world’s most talked-about language generator.

Although GPT-3 was one of the few models of its kind when it debuted in 2020, today there are several alternatives, such as GPT-Neo and GPT-J. How do these alternatives compare to GPT-3? Are they better, worse, or just different?

Let’s explore each of these questions.

The abbreviation GPT stands for generative pre-trained transformer. Since 2018, OpenAI has used generative pre-training, a deep learning method, to train its language models. The method involves training a model on large amounts of text so that it gets better at predicting the most probable next word in a sentence.
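To make "predicting the most probable next word" concrete, here is a deliberately tiny sketch. It is not how GPT works internally: it swaps the neural network for simple word-pair counts, and the corpus and function names are made up for illustration. The idea it shares with GPT is only the objective, which is to pick the likeliest continuation given what came before.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(follower_counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = follower_counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# A toy corpus (hypothetical); real models train on hundreds of gigabytes of text.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram_model(corpus)
print(predict_next_word(model, "the"))
```

A GPT model replaces the count table with a Transformer that looks at the whole preceding passage rather than one word, but it is trained toward the same kind of prediction.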

Combining this method with a neural network architecture called the Transformer, OpenAI released the first GPT in 2018, followed by GPT-2 in 2019 and GPT-3 in 2020. Each successor had more parameters and was trained on more data than the last.

GPT-3 has 175 billion parameters, more than 100 times as many as its predecessor, GPT-2, which has 1.5 billion. GPT-3’s generated text can be quite stunning. It can translate from one language to another, recognize named entities within text, summarize articles, and compose full-length pieces.

GPT-3 currently comes in four versions. Ada is the smallest and cheapest, while Davinci is the largest and most expensive. Below are the details of each model along with pricing.

Users can input simple text commands into GPT-3, and the model produces mostly coherent results. GPT-3 can be pretty creative, too, writing everything from fiction to poetry in the style of Shakespeare, Robert Frost, Burns, or any other renowned poet.

Such capabilities come at a huge price — literally. GPT-3 is not open-source. It is available via OpenAI’s API, but the API is extremely expensive. Although high costs have limited GPT-3’s mainstream adoption, researchers and professionals actively build prototypes and commercial applications using it today.

In the last few years, several startups and research groups have developed their own alternatives to GPT-3. Developing a system like GPT-3 requires a massive upfront investment. Despite such obstacles, groups like EleutherAI have developed and released open source language models to compete with GPT-3.

Many of these models are not as powerful as the largest versions of GPT-3 (such as Curie and Davinci), but they perform just as well as the simpler GPT-3 models (such as Ada and Babbage).

Here’s a summary of the popular open-source alternatives to GPT-3.

GPT-Neo is an open-source alternative to GPT-3 and is publicly available. The model was developed by EleutherAI, a decentralized group of AI researchers and scientists founded in 2020. EleutherAI’s mission is to make AI more accessible by developing and releasing open source models.

Since EleutherAI did not have access to a dataset as extensive and diverse as that of OpenAI, it assembled its own 825-gigabyte dataset called "The Pile." This dataset comprises data from academic sources like PubMed, common websites like Wikipedia and GitHub, and even subtitles from films and TV shows. Since it did not have private computing resources to develop its models, EleutherAI used cloud computing resources from Google's TensorFlow Research Cloud and from CoreWeave.

EleutherAI also developed and released a second, larger model called GPT-J, which has more parameters and performs better on NLP tasks than its predecessor, GPT-Neo. GPT-J has 6 billion parameters and can perform tasks such as story writing, information retrieval, translation, and code generation.

EleutherAI also developed a simple user interface for GPT-J. This interface allows users to increase the level of creativity of the model by using a parameter known as “temperature.” Moreover, the interface has built-in prompts for users to demo the model’s capabilities.
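For readers curious about what a temperature setting typically does, the sketch below shows the standard technique: the model's raw scores for each candidate word are divided by the temperature before being turned into probabilities. This is a generic illustration, not EleutherAI's code, and the candidate words and scores are invented.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    max_scaled = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - max_scaled) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature=1.0, rng=random):
    """Draw one token at random according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidates for the next word, with made-up scores.
tokens = ["mat", "sofa", "moon"]
logits = [2.0, 1.0, 0.1]

for temperature in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

Low temperatures concentrate probability on the top-scoring word, which gives safer, more repetitive text. High temperatures spread probability across the alternatives, which is what the interface presents as more "creative" output.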

EleutherAI built GPT-J with a Transformer implementation based on JAX, a Python library frequently used for machine learning projects. The model was trained on the same dataset as GPT-Neo.

While GPT-Neo and GPT-J are the best known alternatives to OpenAI’s GPT-3, other companies are developing their own GPT-3 rivals. Some of these may overtake GPT-Neo and GPT-J in popularity. For example, Cohere, a Canada-based AI lab, has begun to release a series of language models. Another company, AI21 Labs, released Jurassic-1, a model with 178 billion parameters. This model rivals the size of OpenAI’s largest GPT-3 version, Davinci.

While these others are worth monitoring, GPT-J and GPT-Neo are the most popular open source alternatives to GPT-3 today.
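Parameter counts are easier to compare once they are translated into hardware terms. The back-of-the-envelope sketch below estimates how much memory the weights alone would occupy, assuming each parameter is stored as a 16-bit number (2 bytes). That storage format is an assumption made for illustration, and the estimate ignores everything else needed to actually run a model.

```python
# Parameter counts as stated in this post.
PARAM_COUNTS = {
    "GPT-J": 6e9,
    "GPT-3 Davinci": 175e9,
    "Jurassic-1": 178e9,
}


def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate size of the weights in gigabytes.

    bytes_per_param=2 assumes 16-bit floats; use 4 for 32-bit floats.
    """
    return num_params * bytes_per_param / 1e9


for name, num_params in PARAM_COUNTS.items():
    size_gb = weight_memory_gb(num_params)
    print(f"{name}: roughly {size_gb:.0f} GB of weights at 16-bit precision")
```

Under that assumption, the gap between a 6-billion-parameter model and a 175-billion-parameter one is the gap between a model that could plausibly fit on a single high-end accelerator and one that must be split across many.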

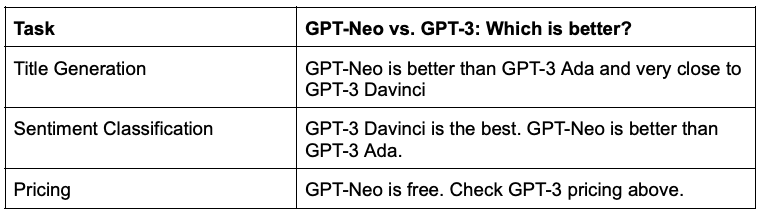

Georgian, a fintech company, recently compared the performance of GPT-Neo against GPT-3. Here’s the key takeaway: GPT-Neo is better than OpenAI’s smallest model, Ada, but not quite as good as OpenAI’s largest model, Davinci. That being said, GPT-Neo is free, while GPT-3 Davinci is very expensive.

GPT-Neo vs. GPT-3 on Title Generation Task

The Georgian team asked GPT-Neo and GPT-3 to generate blog post titles given the following prompt: The post describes the process for taking thoughtful meeting notes. The author covers how he trained himself to remember what is happening during long meetings and suggests actionable steps to integrate the note-taking habit in your life.

Here are the results.

GPT-Neo performs better than GPT-3 Ada but not quite as well as GPT-3 Davinci. For example, GPT-Neo generated titles such as "Taking Notes During Meetings," whereas GPT-3 Davinci generated titles such as “How to take notes from meetings like a boss.”

GPT-Neo vs. GPT-3 on Sentiment Classification Task

The results on sentiment classification were similar: GPT-Neo outperformed GPT-3 Ada but could not match the performance of GPT-3 Davinci. Check out more detailed results here.

Price Comparison

GPT-Neo is open-source and free. GPT-3 Davinci, on the other hand, is expensive. For the absolute best performance, OpenAI still wins, but users with limited budgets or large machine learning jobs should consider GPT-Neo as an alternative.

Several developers have compared GPT-J with GPT-3. These tests involved both zero-shot tasks (tasks where no labeled examples are provided to the model) and few-shot tasks (tasks where a handful of labeled examples are included in the prompt). Here are the key findings:

- In zero-shot settings, there is not a noticeable difference between the performance of GPT-J and GPT-3.

- GPT-J’s hardware performance is on par with GPT-3 Babbage.

- GPT-J handles chatbot conversations better than GPT-3.

- GPT-J is superior to GPT-3 at Python code generation.

- GPT-J is free, a major selling point.

GPT-J Works Similarly to GPT-3 in Zero-Shot Settings

When it comes to zero-shot tasks, GPT-J performed nearly as well as GPT-3. Aran Komatsuzaki, who ran these tests, also noticed that GPT-J was far more efficient in hardware performance than GPT-Neo. GPT-J had hardware performance similar to GPT-3 Babbage.
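The difference between a zero-shot task and one that supplies labeled examples comes down to what goes into the prompt. The sketch below builds both kinds of prompt for a sentiment classification task. The prompt layout, the function name, and the example reviews are all hypothetical; they are not the prompts used in the tests described here.

```python
def build_prompt(task_instruction, query, examples=()):
    """Assemble a text prompt; with no examples this is a zero-shot prompt."""
    lines = [task_instruction, ""]
    for text, label in examples:
        # Each labeled example shows the model the expected input/output pattern.
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")  # the model is expected to complete this line
    return "\n".join(lines)


instruction = "Classify the sentiment of each text as Positive or Negative."
query = "The battery died after two days."

# Zero-shot: only the instruction and the text to classify.
zero_shot_prompt = build_prompt(instruction, query)

# Few-shot: the same request, preceded by labeled examples (made up here).
few_shot_prompt = build_prompt(
    instruction,
    query,
    examples=[
        ("I loved every minute of it.", "Positive"),
        ("The service was slow and rude.", "Negative"),
    ],
)

print(zero_shot_prompt)
print("-----")
print(few_shot_prompt)
```

Either string would be sent to the model as-is. A model that does well in the zero-shot case has to infer the task from the instruction alone, which is why zero-shot results are a demanding test.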

GPT-J Has Better Chatbot Abilities than GPT-3

According to Max Woolf, GPT-J handles chatbot conversations better than GPT-3. Note that these tests were from the middle of 2021, and GPT-3 Davinci was not available then. GPT-3 Davinci may now rival or exceed the performance of GPT-J.

GPT-J is Better in Python Code Generation

Woolf also found that GPT-J outperformed GPT-3 at Python code generation.

Price Comparison

Like GPT-Neo, GPT-J is free.

The primary benefit of having GPT-3 alternatives is affordability. Free-to-use open source language generators make natural language processing accessible to more researchers, companies, and other organizations. Also, competition is healthy for the market. Having alternatives to OpenAI’s GPT-3 will increase the pace of improvement.

Developers of language models such as GPT-Neo and GPT-J have done a great job making the technology affordable and widely accessible. Let us know what you think about these open source alternatives in the comments below.


Here is a bit more about my experience in this space and the two books I’ve written on unsupervised learning and natural language processing.